Vector Search with Azure AI Vision and PostgreSQL – MVP TechBytes

My presentation about vector search with Azure AI Vision and Azure Cosmos DB for PostgreSQL at the virtual show MVP TechBytes.

Have you ever found yourself in a situation where you remember what happened in a movie but struggle to recall its name and can’t come up with the right words to search for it? Or, maybe you’ve had that moment when you have a picture of a product you wish to purchase but aren’t sure how to find it online.

Unlike traditional search systems that rely on mentions of keywords, tags, or other metadata, vector search leverages machine learning to capture the meaning of data, allowing you to search by what you mean. Consider the second scenario from the paragraph above as an example. In a vector search system, you can simply use the image of a product to find similar ones instead of struggling to come up with the proper search words. This method is also known as “reverse image search.”

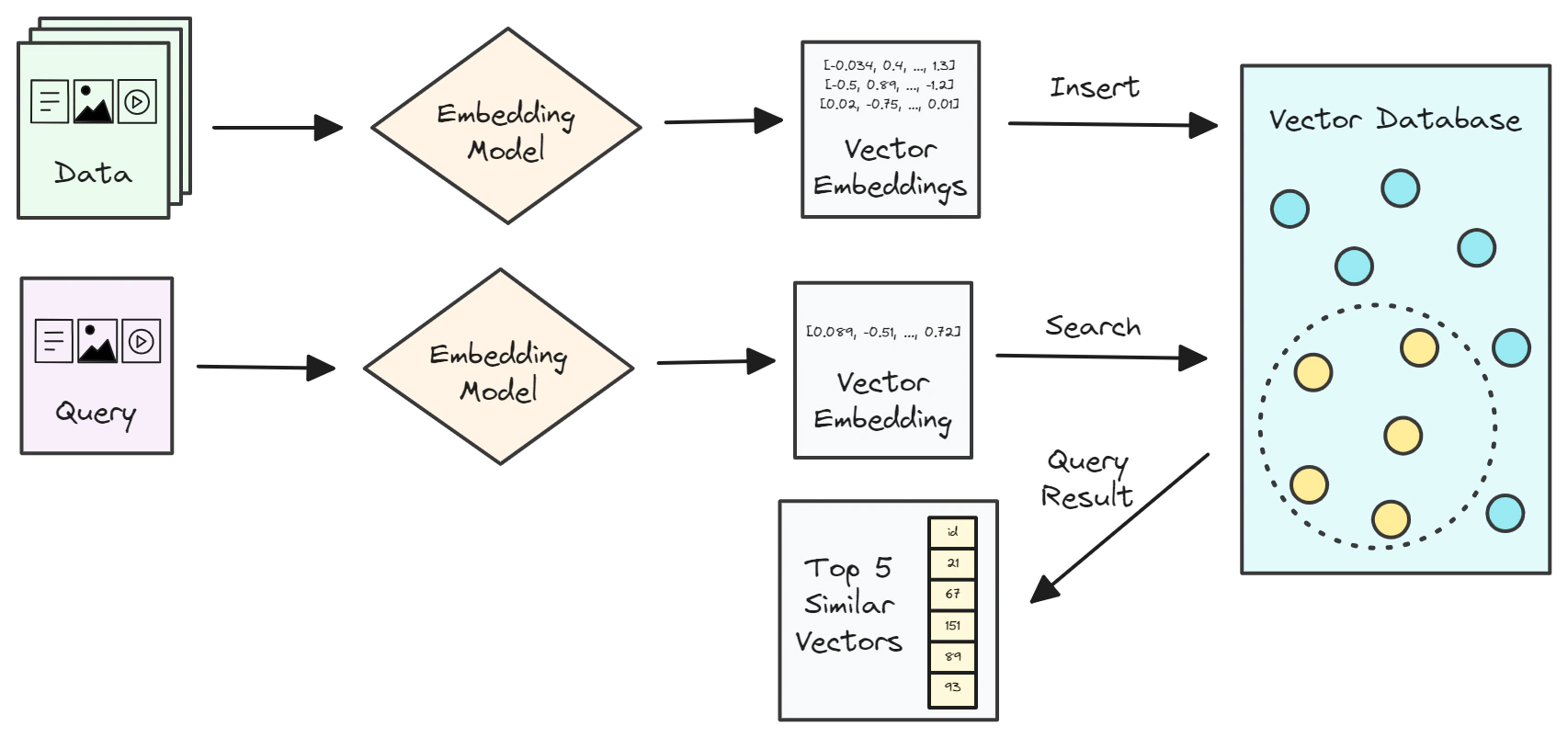

Vector search works by transforming data, such as text, images, videos, and audio, into a numerical representation called a vector embedding, and then applying nearest neighbor algorithms to find similar data.

A few days ago, I had a wonderful time delivering a session about building a vector search system using Azure Cosmos DB for PostgreSQL and Azure AI Vision at the monthly Azure Cosmos DB Usergroup’s virtual show hosted by Jay Gordon and Microsoft Reactor. In this article, I will document the main points of my session and provide you with learning resources and code examples.

You will:

Before you start, follow these steps to set up your workspace:

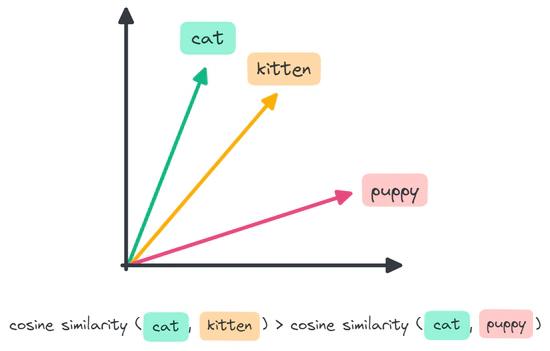

In simple terms, vector embeddings are numerical representations of data, such as images, text, videos, and audio. They are high-dimensional, dense vectors in which each dimension carries some information about the original content. By translating data into vectors, computers can capture the meaning of the data and compare two objects semantically: the closer two vectors are in the vector space, the more semantically similar the objects they represent.

We can measure semantic similarity with a distance metric such as Euclidean distance, inner product, or cosine distance. In the following examples, we will use cosine similarity, which is defined as the cosine of the angle between two vectors. Cosine distance is simply 1 minus the cosine similarity.
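To make the metric concrete, here is a minimal sketch of cosine similarity and cosine distance in plain Python. The function names are my own choice for illustration and are not taken from the notebooks.

```python
import math


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    if len(a) != len(b):
        raise ValueError("Vectors must have the same number of dimensions.")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("Cosine similarity is undefined for a zero vector.")
    return dot / (norm_a * norm_b)


def cosine_distance(a, b):
    """Cosine distance is 1 minus the cosine similarity."""
    return 1.0 - cosine_similarity(a, b)
```

Vectors that point in the same direction score close to 1, orthogonal vectors score 0, and vectors that point in opposite directions score close to -1.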

Numerous embedding models are available from providers such as OpenAI, Hugging Face, and Azure AI Vision. Azure AI Vision (formerly known as Azure Computer Vision) provides two Image Retrieval APIs for vectorizing image and text queries: the Vectorize Image API and the Vectorize Text API. Vectorization converts images and text into coordinates in a shared 1024-dimensional vector space, enabling users to search a collection of images using text and/or images without the need for metadata, such as image tags, labels, or captions.
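As a rough sketch of what a call to the Vectorize Image API can look like, the snippet below builds the HTTP request with Python's standard library only. The route, the `api-version` value, the `modelVersion` parameter, and the `vector` response field reflect my understanding of the preview REST API and should be treated as assumptions; please check the current Azure AI Vision documentation before relying on them. The helper names are hypothetical.

```python
import json
import urllib.request

# Assumption: preview API version of the Image Retrieval APIs.
API_VERSION = "2023-02-01-preview"


def build_vectorize_image_request(endpoint, key, image_url):
    """Build (but do not send) a POST request for the Vectorize Image API."""
    url = (
        f"{endpoint.rstrip('/')}/computervision/retrieval:vectorizeImage"
        f"?api-version={API_VERSION}&modelVersion=latest"
    )
    body = json.dumps({"url": image_url}).encode("utf-8")
    headers = {
        "Content-Type": "application/json",
        "Ocp-Apim-Subscription-Key": key,
    }
    return urllib.request.Request(url, data=body, headers=headers, method="POST")


def vectorize_image(endpoint, key, image_url):
    """Send the request and return the embedding as a list of floats."""
    request = build_vectorize_image_request(endpoint, key, image_url)
    with urllib.request.urlopen(request) as response:
        # Assumption: the JSON response carries the embedding under "vector".
        return json.load(response)["vector"]
```

Under the same assumptions, the Vectorize Text API would follow the same pattern with a text payload instead of an image URL.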

A vector search system works by comparing the vector embedding of a user’s query with a set of pre-stored vector embeddings to find a list of vectors that are the most similar to the query vector. The diagram below illustrates the workflow.
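The comparison step can be sketched as an exhaustive (brute-force) nearest neighbor search. This is only an illustration with tiny made-up 3-dimensional vectors and hypothetical file names; real embeddings from Azure AI Vision have 1024 dimensions, and a database index would normally replace the linear scan.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length, non-zero vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def search(query_vector, stored_embeddings, k=3):
    """Return the k stored items most similar to the query, best match first."""
    scored = [
        (name, cosine_similarity(query_vector, vector))
        for name, vector in stored_embeddings.items()
    ]
    scored.sort(key=lambda pair: pair[1], reverse=True)
    return scored[:k]


# Toy data: made-up embeddings for three hypothetical images.
stored = {
    "beach.jpg": [0.9, 0.1, 0.0],
    "forest.jpg": [0.1, 0.9, 0.1],
    "city.jpg": [0.0, 0.2, 0.9],
}

results = search([1.0, 0.2, 0.0], stored, k=2)
for name, score in results:
    print(f"{name}: {score:.3f}")
```

The query vector could come from either an image or a text prompt, as long as both are embedded into the same vector space as the stored vectors.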

Vector embeddings can be stored in a vector database, which is a specialized type of database optimized for storing and querying vectors with a large number of dimensions.
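With PostgreSQL, the pgvector extension adds a `vector` column type and distance operators. The sketch below keeps the SQL in Python strings so it can be run with any PostgreSQL driver. The table and column names are hypothetical, the statements use standard pgvector syntax, and a managed service may require a different command to enable the extension.

```python
# Standard pgvector syntax; table and column names are hypothetical.
CREATE_EXTENSION_SQL = "CREATE EXTENSION IF NOT EXISTS vector;"

CREATE_TABLE_SQL = """
CREATE TABLE IF NOT EXISTS images (
    id bigserial PRIMARY KEY,
    file_name text NOT NULL,
    embedding vector(1024)  -- matches the 1024-dimensional embeddings
);
"""

# Optional approximate index; vector_cosine_ops pairs with the <=> operator.
CREATE_INDEX_SQL = """
CREATE INDEX IF NOT EXISTS images_embedding_hnsw
ON images USING hnsw (embedding vector_cosine_ops);
"""

# <=> is pgvector's cosine distance operator, so 1 - distance = similarity.
SEARCH_SQL = """
SELECT file_name, 1 - (embedding <=> %s::vector) AS similarity
FROM images
ORDER BY embedding <=> %s::vector
LIMIT %s;
"""


def to_pgvector_literal(vector):
    """Format a sequence of numbers as a pgvector text literal, e.g. [1.0,0.5]."""
    return "[" + ",".join(str(float(x)) for x in vector) + "]"


def search_parameters(query_vector, k=5):
    """Parameters for SEARCH_SQL: the literal is used twice, then the limit."""
    literal = to_pgvector_literal(query_vector)
    return (literal, literal, k)
```

With a DB-API style driver, a search would then look roughly like `cursor.execute(SEARCH_SQL, search_parameters(query_vector, k=5))`, where `cursor` is a connection cursor you create yourself.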

In the following video, you can learn more about vector embeddings and vector similarity search with Azure Cosmos DB for PostgreSQL and Azure AI Vision, and walk through the Jupyter Notebooks that are available in my GitHub repository.

In my GitHub repository, you can find some Jupyter Notebooks to help you gain hands-on experience with the concepts introduced in the above video. You may want to refer to the README of the project for instructions on how to set up your Azure resources.

In the quickstart, you will explore the Image Retrieval APIs of Azure AI Vision and the basics of the pgvector extension. You will build a simple app to search a collection of images from a wide range of natural scenes. The images were taken from Kaggle.

In this extended scenario, you will:

If you’d like to dive deeper into this topic, here are some helpful resources.

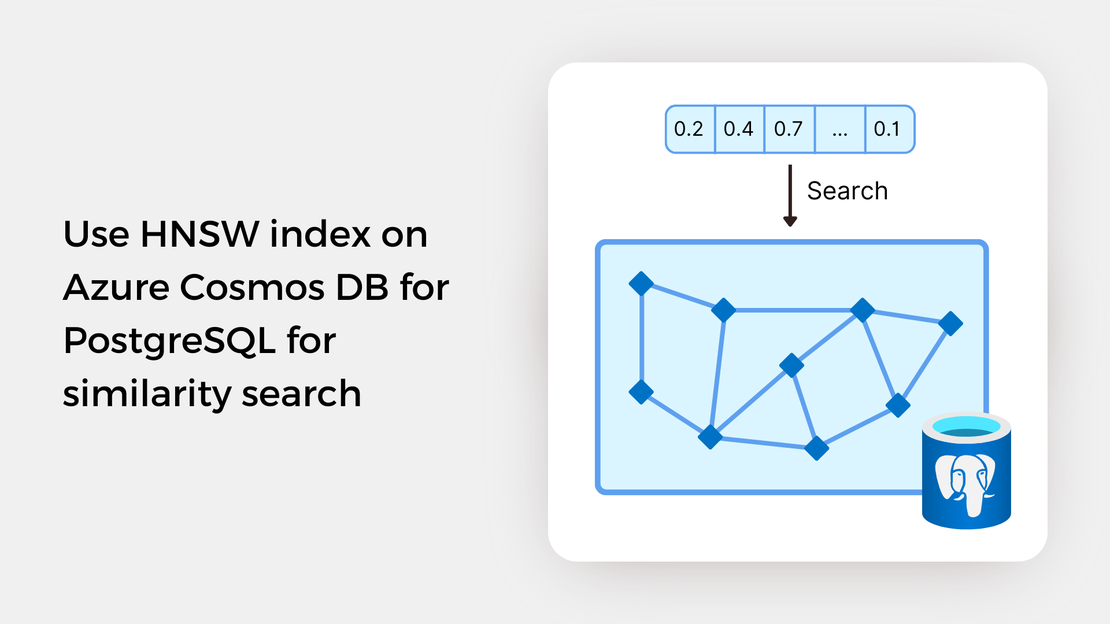

Explore vector similarity search using the Hierarchical Navigable Small World (HNSW) index of pgvector on Azure Cosmos DB for PostgreSQL.

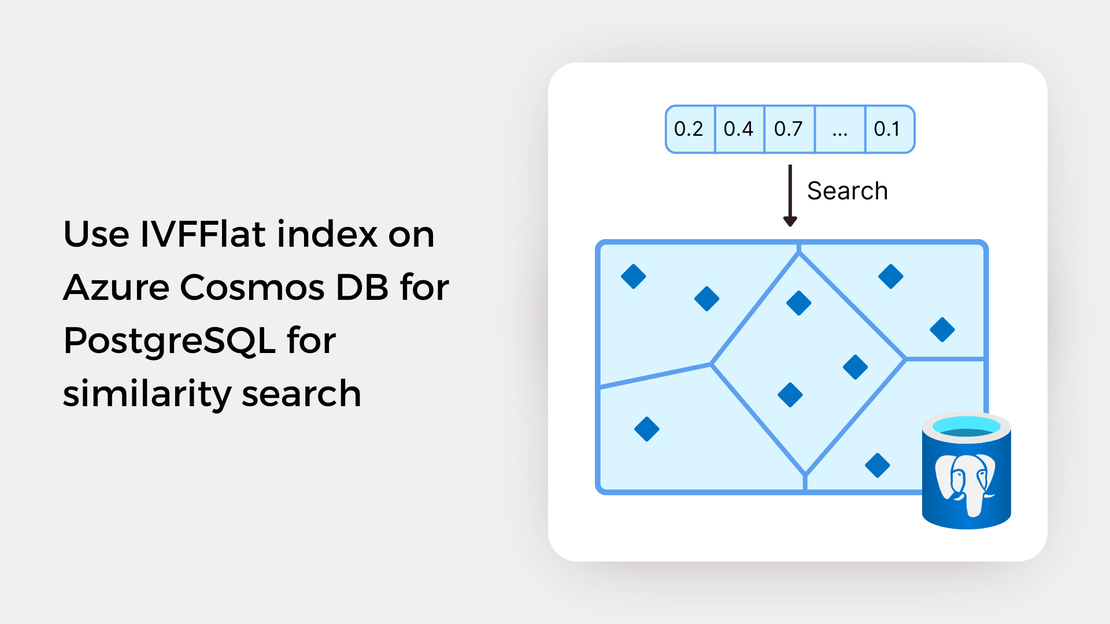

Explore vector similarity search using the Inverted File with Flat Compression (IVFFlat) index of pgvector on Azure Cosmos DB for PostgreSQL.

Learn how to write SQL queries to search for and identify images that are semantically similar to a reference image or text prompt using pgvector.