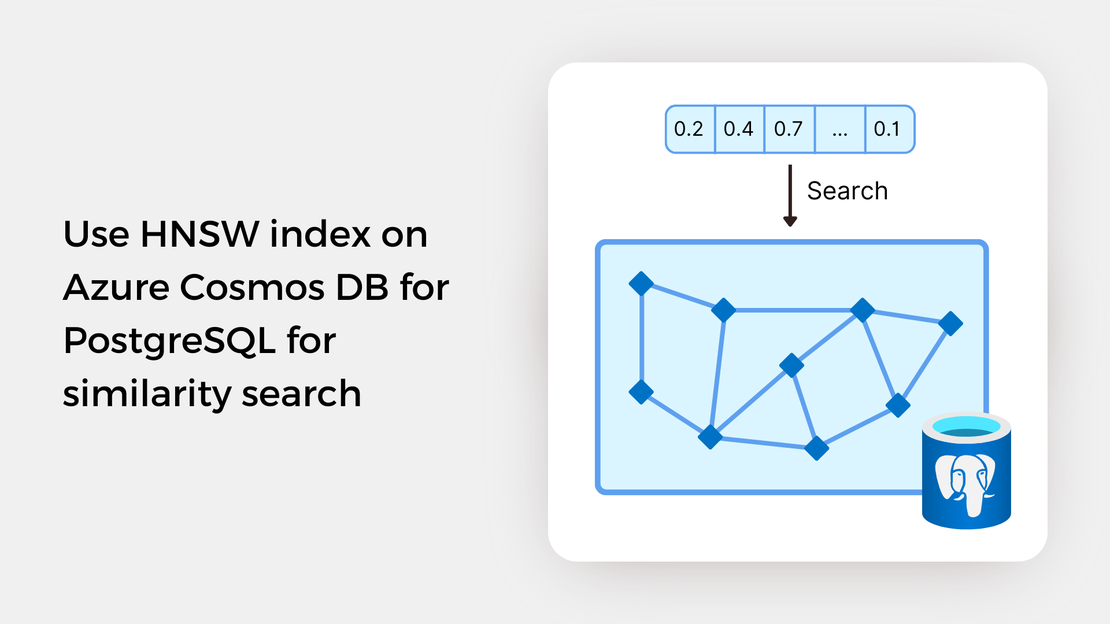

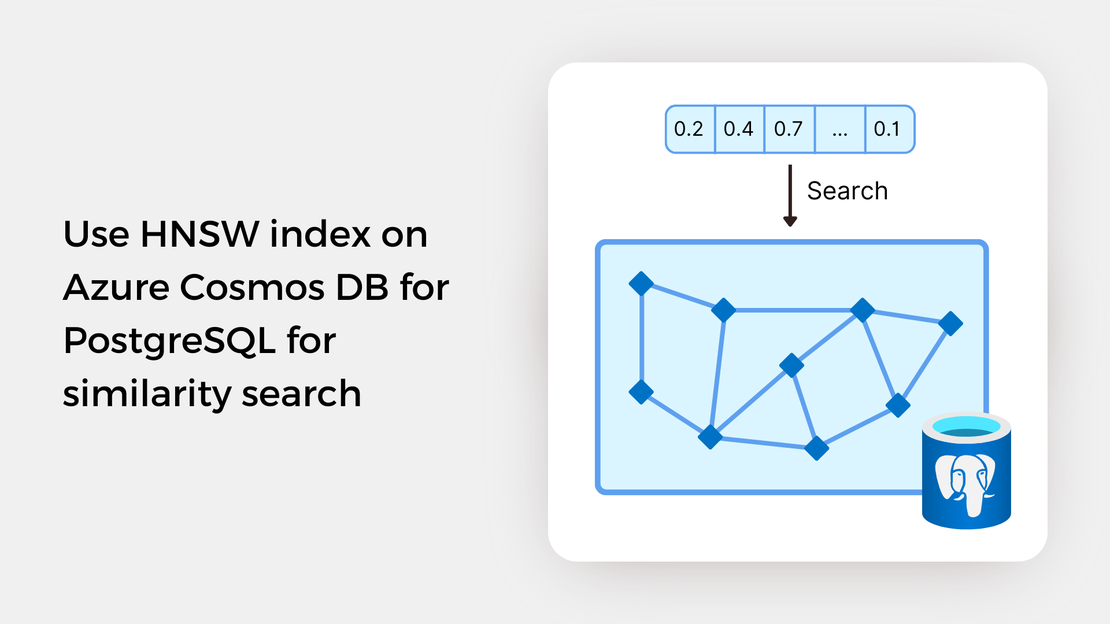

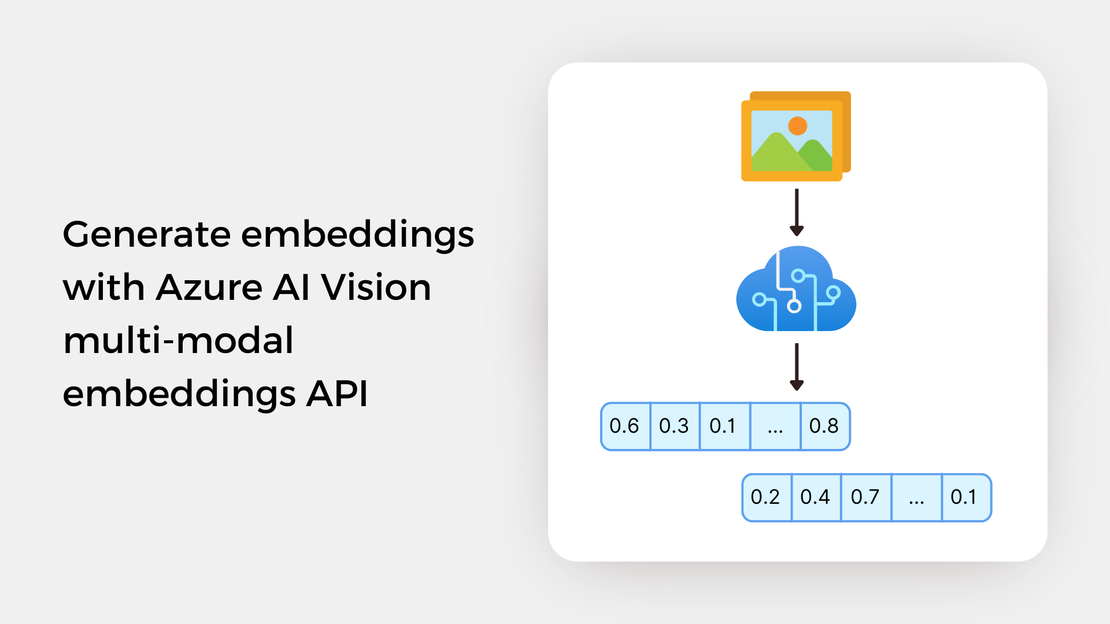

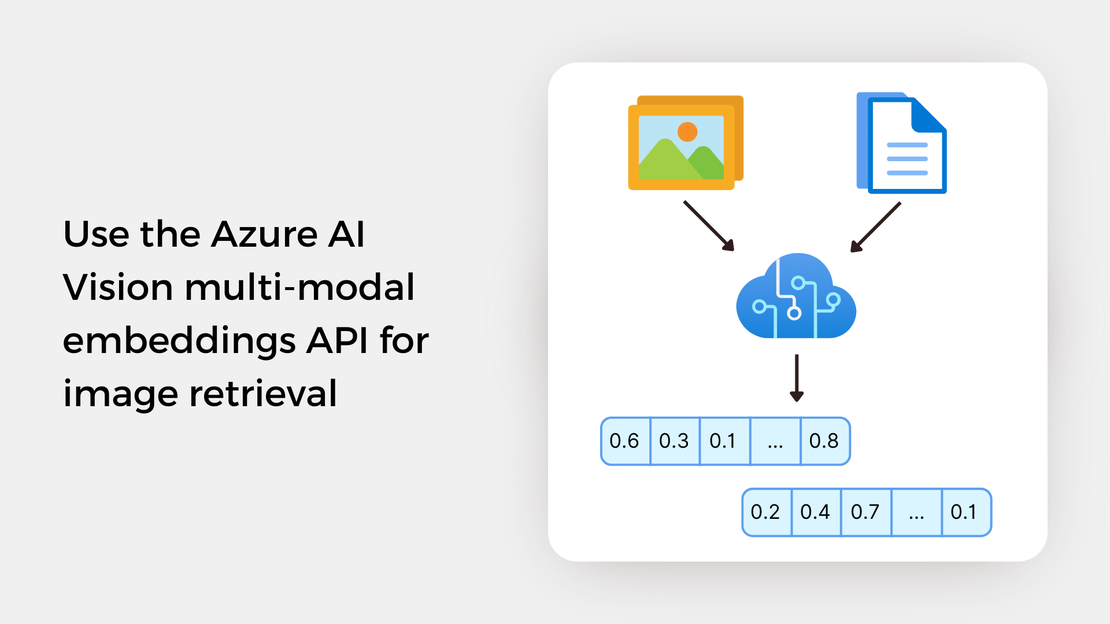

Welcome to the second part of the “Image similarity search with pgvector” learning series! In the previous article, you learned the basics of vector embeddings and vector similarity search. You also used the multi-modal embeddings APIs of Azure AI Vision to generate embeddings for images and text, and calculated the cosine similarity between two vectors.

In this learning series, we will create an application that enables users to search for paintings based on either a reference image or a text description. We will use the SemArt Dataset, which contains approximately 21k paintings gathered from the Web Gallery of Art. Each painting comes with various attributes, like a title, description, and the name of the artist.

In this tutorial, you will:

To proceed with this tutorial, ensure that you have the following prerequisites installed and configured:

In this article, you will find instructions on how to generate embeddings for a collection of images using Azure AI Vision. The complete working project can be found in the GitHub repository. If you want to follow along, you can fork the repository and clone it to have it locally available.

Before running the scripts, you should:

1. Download the SemArt Dataset into the semart_dataset directory.
2. Create a virtual environment and activate it.
3. Install the required Python packages.

For our application, we’ll be working with a subset of the original dataset. Alongside the image files, we aim to retain associated metadata like the title, author’s name, and description for each painting. To prepare the data for further processing and eliminate unnecessary information, we will take several steps, which are outlined in the Jupyter Notebook available in my GitHub repository.

After these steps, the final dataset will comprise 11,206 images of paintings.

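To generate embeddings for the images, our process can be summarized as follows:

1. Compute the vector embedding of each image with the Azure AI Vision Vectorize Image API.
2. Process the images in batches, using multiple threads, and save the embeddings of each batch into a CSV file.
3. Update the dataset by inserting the vector embedding for each image.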

In the following sections, we will discuss specific segments of the code.

As discussed in Part 1, computing the vector embedding of an image involves sending a POST request to the Azure AI Vision retrieval:vectorizeImage API. The request body contains the binary image data (or a publicly available image URL), and the response is a JSON object containing the vector embedding of the image. In Python, you can send this request with the requests library.
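
As a rough illustration of the request described above, the following sketch sends binary image data to the retrieval:vectorizeImage endpoint. The function name `vectorize_image`, the optional `post` parameter, the API version string, and the `vector` response field are assumptions made for this example, so check the Azure AI Vision documentation for the values that apply to your resource.

```python
from typing import Any, Callable, List, Optional

# Assumption: a preview API version; replace it with the one your resource supports.
API_VERSION = "2023-02-01-preview"


def vectorize_image(
    image_bytes: bytes,
    endpoint: str,
    key: str,
    post: Optional[Callable[..., Any]] = None,
) -> List[float]:
    """Send binary image data to the Vectorize Image API and return the embedding."""
    if post is None:
        import requests  # third-party: pip install requests

        post = requests.post

    url = f"{endpoint.rstrip('/')}/computervision/retrieval:vectorizeImage"
    response = post(
        url,
        params={"api-version": API_VERSION},
        headers={
            "Ocp-Apim-Subscription-Key": key,
            "Content-Type": "application/octet-stream",
        },
        data=image_bytes,
        timeout=30,
    )
    response.raise_for_status()  # raises on HTTP errors such as 429
    # Assumption: the JSON response stores the embedding under "vector".
    return response.json()["vector"]
```

With a real resource, you might call `vectorize_image(Path(image_path).read_bytes(), endpoint, key)`. The `post` argument exists only so that the function can be exercised without network access.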

The compute_embeddings function computes the vector embeddings for all the images in our dataset. It uses a ThreadPoolExecutor to generate the embeddings for each batch of images on multiple threads, and the tqdm library to display progress bars while the embeddings are being generated.
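
The batching and threading pattern described above could look roughly like the sketch below. It is not the repository's compute_embeddings function: `split_into_batches`, `embed_batch`, and the `embed` callable (any function that maps a filename to a vector) are hypothetical names chosen for this example, and tqdm is treated as optional.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict, Iterator, List, Optional, Sequence

try:
    from tqdm import tqdm
except ImportError:  # tqdm is optional here: fall back to a pass-through wrapper
    def tqdm(iterable, **kwargs):
        return iterable

Vector = Optional[List[float]]


def split_into_batches(items: Sequence[str], batch_size: int) -> Iterator[Sequence[str]]:
    """Yield consecutive slices of at most batch_size items."""
    for start in range(0, len(items), batch_size):
        yield items[start:start + batch_size]


def embed_batch(
    filenames: Sequence[str],
    embed: Callable[[str], Vector],
    max_workers: int = 4,
) -> Dict[str, Vector]:
    """Compute the embeddings of one batch of images on a thread pool."""
    with ThreadPoolExecutor(max_workers=max_workers) as executor:
        # executor.map returns results in input order, so zip() pairs them correctly.
        results = executor.map(embed, filenames)
        vectors = list(tqdm(results, total=len(filenames), desc="Embedding images"))
    return dict(zip(filenames, vectors))
```

Threads suit this workload because each call spends most of its time waiting for the HTTP response rather than using the CPU.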

Once the embeddings for all the images in a batch are computed, the data is saved into a CSV file.
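
One possible way to persist each batch is sketched below using only the standard library. The file layout (an `image_file` column and a JSON-encoded `vector` column) and the function names `save_batch` and `load_embeddings` are assumptions for illustration and may differ from the format used in the repository.

```python
import csv
import json
from pathlib import Path
from typing import Dict, List, Optional


def save_batch(path: Path, embeddings: Dict[str, Optional[List[float]]]) -> int:
    """Append one batch to a CSV file and return the number of rows written."""
    is_new_file = not path.exists()
    written = 0
    with path.open("a", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        if is_new_file:
            writer.writerow(["image_file", "vector"])  # header only once
        for name, vector in embeddings.items():
            if vector is None:  # skip images whose embedding could not be computed
                continue
            writer.writerow([name, json.dumps(vector)])
            written += 1
    return written


def load_embeddings(path: Path) -> Dict[str, List[float]]:
    """Read the CSV file back into a {filename: vector} dictionary."""
    with path.open(newline="", encoding="utf-8") as f:
        return {row["image_file"]: json.loads(row["vector"]) for row in csv.DictReader(f)}
```

Appending after every batch means that an interrupted run loses at most the batch in progress.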

The Azure AI Vision API imposes rate limits. In the free tier, only 20 transactions per minute are allowed, while the standard tier allows up to 30 transactions per second, depending on the operation (Source: Microsoft Docs). If you exceed the rate limit, you’ll receive an HTTP 429 error.

For our application, it is recommended to use the standard tier during the embeddings generation process and limit the number of requests per second to approximately 10 to avoid potential issues.
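
To make the idea of capping the request rate concrete, here is a minimal sketch of a thread-safe limiter and a retry helper for 429 responses. `RateLimiter` and `send_with_retry` are hypothetical helpers written for this example, not part of any Azure SDK, and the backoff delays are arbitrary choices.

```python
import threading
import time
from typing import Any, Callable


class RateLimiter:
    """Space calls at least 1/max_per_second seconds apart, across threads."""

    def __init__(self, max_per_second: float, clock=time.monotonic, sleep=time.sleep):
        self._interval = 1.0 / max_per_second
        self._clock = clock
        self._sleep = sleep
        self._lock = threading.Lock()
        self._next_slot = clock()

    def wait(self) -> None:
        """Block until the caller's reserved time slot is reached."""
        with self._lock:
            now = self._clock()
            slot = max(self._next_slot, now)
            self._next_slot = slot + self._interval  # reserve the following slot
        delay = slot - now
        if delay > 0:
            self._sleep(delay)


def send_with_retry(
    send: Callable[[], Any],
    max_attempts: int = 5,
    base_delay: float = 1.0,
    sleep: Callable[[float], None] = time.sleep,
) -> Any:
    """Call send() until it returns a non-429 response or attempts run out."""
    if max_attempts < 1:
        raise ValueError("max_attempts must be at least 1")
    for attempt in range(max_attempts):
        response = send()
        if response.status_code != 429 or attempt == max_attempts - 1:
            return response
        sleep(base_delay * 2 ** attempt)  # exponential backoff: 1 s, 2 s, 4 s, ...
```

A worker thread would call `limiter.wait()` on a shared `RateLimiter(10)` before each request, which keeps the overall rate at roughly 10 requests per second regardless of the number of threads.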

After computing the vector embeddings for all images in the dataset, we update the dataset by inserting the vector embedding for each image. In the generate_dataset function, the merge method of pandas.DataFrame combines the dataset with the computed embeddings using a database-style join.
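
The database-style join can be illustrated with a small, made-up example. The column names (`image_file`, `title`, `author`, `vector`), the sample rows, and the `attach_embeddings` helper are placeholders rather than the repository's actual schema or its generate_dataset function.

```python
import pandas as pd

# Toy metadata and embeddings; the real dataset has thousands of rows.
paintings = pd.DataFrame(
    {
        "image_file": ["a.jpg", "b.jpg", "c.jpg"],
        "title": ["Painting A", "Painting B", "Painting C"],
        "author": ["Artist 1", "Artist 2", "Artist 3"],
    }
)
embeddings = pd.DataFrame(
    {
        "image_file": ["a.jpg", "c.jpg"],
        "vector": [[0.1, 0.2], [0.3, 0.4]],
    }
)


def attach_embeddings(dataset: pd.DataFrame, vectors: pd.DataFrame, key: str = "image_file") -> pd.DataFrame:
    """Join the metadata with the embeddings on the shared key column."""
    # An inner join keeps only the paintings for which an embedding exists.
    return dataset.merge(vectors, on=key, how="inner")


merged = attach_embeddings(paintings, embeddings)
```

Choosing `how="left"` instead would keep every painting and leave a missing value in the `vector` column wherever no embedding was computed.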

In this post, we computed vector embeddings for a set of images featuring paintings using the Azure AI Vision Vectorize Image API. The code shared here serves as a reference, and you can customize it to suit your particular use case.

Here are some additional learning resources:

Explore vector similarity search using the Hierarchical Navigable Small World (HNSW) index of pgvector on Azure Cosmos DB for PostgreSQL.

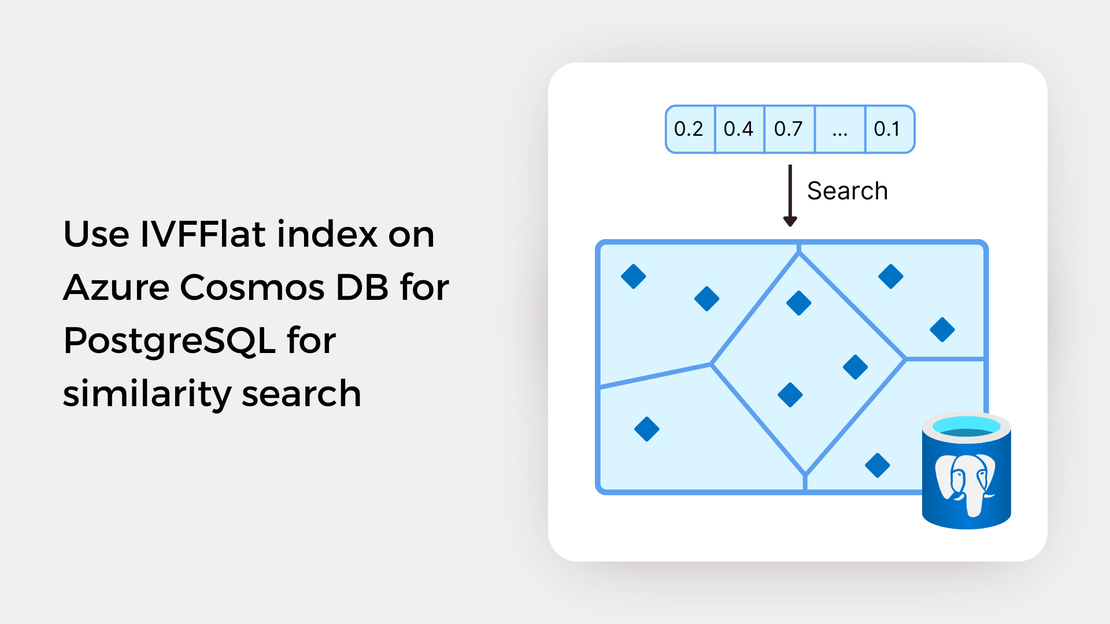

Explore vector similarity search using the Inverted File with Flat Compression (IVFFlat) index of pgvector on Azure Cosmos DB for PostgreSQL.

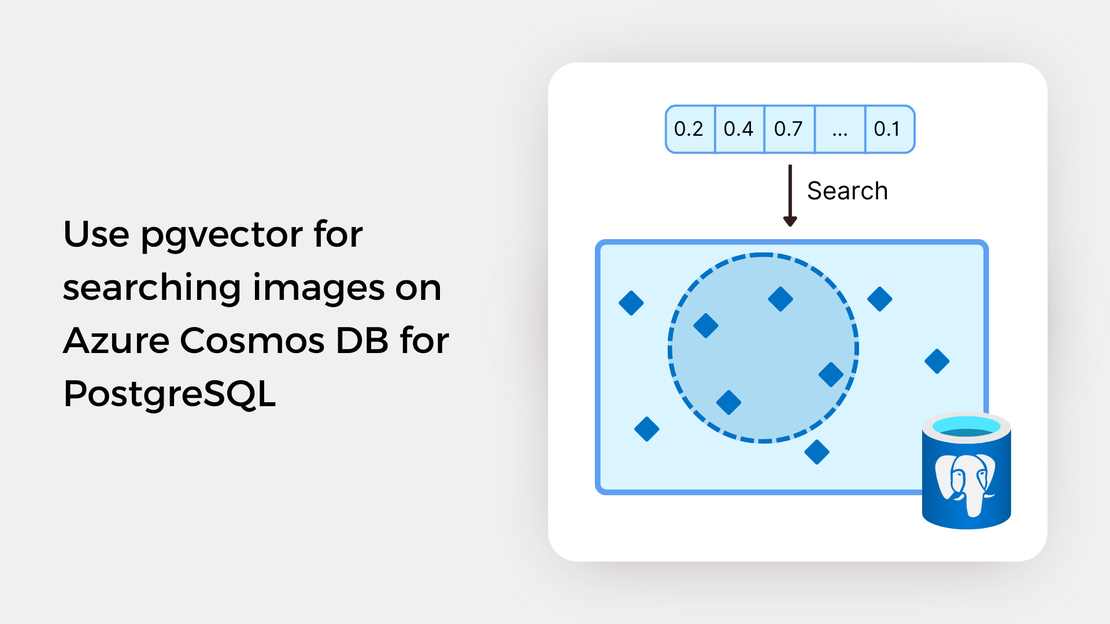

Learn how to write SQL queries to search for and identify images that are semantically similar to a reference image or text prompt using pgvector.

Explore the basics of vector search and generate vector embeddings for images and text using the Azure AI Vision multi-modal embeddings APIs.