Serverless image classification with Azure Functions and Custom Vision – Part 4

In this article, you will deploy a function project to Azure using Visual Studio Code to create a serverless HTTP API.

Welcome to the new series focused on Azure Custom Vision and Azure Functions! In this series, you are going to train a TensorFlow image classification model in Azure Custom Vision and run the model in an Azure Function.

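In the first part, you will build an image classification model using the Custom Vision service. You will learn how to create a Custom Vision project with the Python SDK, add tags and upload training images, train and evaluate the model, and publish the trained iteration and use it to make predictions.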

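To complete the exercise, you will need an Azure subscription and a Custom Vision resource, with training and prediction resources, created in the Azure portal.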

You will also need to install Python 3 and Visual Studio Code or another code editor.

You are working at an animal shelter, and you want to build a registration app for new animals that automatically classifies them based on the uploaded images. You are going to use Azure Custom Vision to train a “Cats and Dogs” classification model and deploy the model in an Azure Function. The function will be invoked via HTTP requests.

Azure Custom Vision is an Azure Cognitive Services offering that lets you build and deploy your own image classification and object detection models. You can train your models using either the Custom Vision web-based interface or the Custom Vision client library SDKs. In this article, we will use the Python SDK and Visual Studio Code to train our Custom Vision model.

Do you want to use the web-based interface to build your model? You can read my previous article about creating a Custom Vision model for flower classification.

Images of cats and dogs were taken from the Kaggle Cats and Dogs Dataset. The images originate from a collection of over three million photos of cats and dogs that were manually classified by people at thousands of animal shelters across the US. To build and train our Custom Vision model, we will use only 120 images per class.

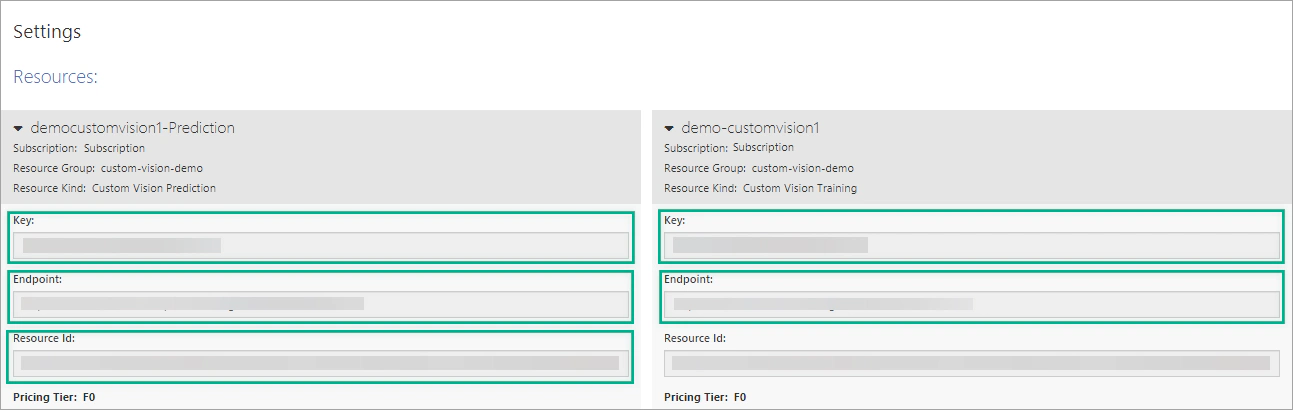

Once you have created a Custom Vision resource in the Azure portal, you will need the key and endpoint of your training and prediction resources to connect your Python application to Custom Vision. You can find these values in both the Azure portal and the Custom Vision web portal.

Navigate to customvision.ai and sign in.

Click the settings icon (⚙) in the top toolbar.

Expand your prediction resource and save the Key, the Endpoint, and the Prediction resource ID.

Expand the training resource and save the Key and the Endpoint.

To create an image classification project with Custom Vision for Python, you’ll need the Custom Vision client library. Install the Azure Cognitive Services Custom Vision SDK for Python with pip: `pip install azure-cognitiveservices-vision-customvision`.

Create a configuration file (.env) and save the Azure keys and endpoints you copied in the previous step.

Want to view the whole code at once? You can find it on GitHub.

Create a new Python file (app.py) and import the following libraries:

Add the following code to load the keys and endpoints from the configuration file.

Use the following code to create CustomVisionTrainingClient and CustomVisionPredictionClient objects. You will use the trainer object to create a new Custom Vision project and train an image classification model, and the predictor object to make predictions using the published endpoint.

To create a new Custom Vision project, use the create_project method of the trainer object. By default, the domain of a new project is General. Since we want to export our model, we need to select one of the compact domains, because only models trained in a compact domain can be exported.

Add the following code to create a new Multiclass classification project in the General (compact) domain.
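
A sketch of the project creation, assuming the domain is looked up by its display name `General (compact)` among the classification domains returned by `get_domains`. The function name `create_compact_project` is hypothetical.

```python
def create_compact_project(trainer, name, description=None):
    """Create a Multiclass classification project in the General (compact) domain."""
    # get_domains() returns both classification and object detection domains,
    # so filter on the domain type as well as the name
    compact_domain = next(
        (
            domain
            for domain in trainer.get_domains()
            if domain.type == "Classification" and domain.name == "General (compact)"
        ),
        None,
    )
    if compact_domain is None:
        raise LookupError("General (compact) classification domain not found")
    # Multiclass: each image gets exactly one tag (cat or dog)
    return trainer.create_project(
        name,
        description=description,
        domain_id=compact_domain.id,
        classification_type="Multiclass",
    )
```

Usage, with a hypothetical project name: `project = create_compact_project(trainer, "Cats and Dogs")`.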

The images that we will use to build our model are grouped into two classes (tags): cat and dog. Insert the following code after the project creation. This code adds classification tags to the project using the create_tag method and sets a description for each tag.
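
A minimal sketch, assuming the tag names `cat` and `dog` from the text and placeholder descriptions. `create_tags` is a hypothetical helper that returns the created tags keyed by name, so their IDs can be reused when uploading images.

```python
def create_tags(trainer, project_id, tag_descriptions):
    """Create one tag per class and return the Tag objects keyed by name."""
    tags = {}
    for name, description in tag_descriptions.items():
        tags[name] = trainer.create_tag(project_id, name, description=description)
    return tags


# Placeholder descriptions; adjust them to your own wording
TAG_DESCRIPTIONS = {
    "cat": "Photos of cats",
    "dog": "Photos of dogs",
}
```

Usage: `tags = create_tags(trainer, project.id, TAG_DESCRIPTIONS)`, after which `tags["cat"].id` identifies the cat tag.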

The following code uploads the training images and their corresponding tags. In each batch, it uploads 60 images of the same tag.

Use the train_project method of the trainer object to train your model using all the images in the training set.
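
`train_project` starts training and returns the new iteration right away, so the iteration has to be polled until its status becomes `Completed`. A minimal sketch, where the helper name, the 10-second polling interval, and the one-hour timeout are assumptions:

```python
import time


def train_and_wait(trainer, project_id, poll_seconds=10, timeout_seconds=3600):
    """Train the project and block until the new iteration has finished training."""
    iteration = trainer.train_project(project_id)
    deadline = time.monotonic() + timeout_seconds
    while iteration.status != "Completed":
        if time.monotonic() > deadline:
            raise TimeoutError(f"Iteration {iteration.id} still {iteration.status!r}")
        time.sleep(poll_seconds)
        # Refresh the iteration to read its current training status
        iteration = trainer.get_iteration(project_id, iteration.id)
        print("Training status:", iteration.status)
    return iteration
```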

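The Custom Vision service calculates three performance metrics: precision, the fraction of predicted tags that are correct; recall, the fraction of actual tags that the model identifies correctly; and average precision (AP), which summarizes precision and recall across thresholds.

The following code displays performance information for the latest training iteration and for each tag, using a probability threshold of 50%. The get_iteration_performance method also reports the standard deviation of the precision and recall values.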
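
A sketch of the evaluation step, assuming `iteration` is the trained iteration from the previous step. `format_performance` is a hypothetical helper that reads the overall and per-tag values returned by `get_iteration_performance` at a 50% threshold.

```python
def format_performance(trainer, project_id, iteration_id, threshold=0.5):
    """Return one summary line for the iteration and one line per tag."""
    perf = trainer.get_iteration_performance(project_id, iteration_id, threshold=threshold)
    lines = [
        f"Iteration: precision {perf.precision:.1%} (std {perf.precision_std_deviation:.1%}), "
        f"recall {perf.recall:.1%} (std {perf.recall_std_deviation:.1%}), "
        f"AP {perf.average_precision:.1%}"
    ]
    # per_tag_performance holds the same metrics computed for each tag
    for tag in perf.per_tag_performance:
        lines.append(
            f"{tag.name}: precision {tag.precision:.1%}, recall {tag.recall:.1%}, "
            f"AP {tag.average_precision:.1%}"
        )
    return lines
```

Usage: `print("\n".join(format_performance(trainer, project.id, iteration.id)))`.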

If you want to test your model before publishing it, you can use the quick_test_image method of the trainer object.
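
A sketch, assuming a local test image path. `quick_test` is a hypothetical helper that sends the image to `quick_test_image` for a given trained iteration and returns the predictions sorted from most to least likely.

```python
def quick_test(trainer, project_id, iteration_id, image_path):
    """Classify a local image with a trained iteration before it is published."""
    with open(image_path, "rb") as image_file:
        result = trainer.quick_test_image(project_id, image_file.read(), iteration_id=iteration_id)
    # Each prediction carries a tag name and a probability between 0 and 1
    return sorted(
        ((prediction.tag_name, prediction.probability) for prediction in result.predictions),
        key=lambda pair: pair[1],
        reverse=True,
    )
```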

Add the following code, which publishes the current iteration. Once the iteration is published, you can use the prediction endpoint to make predictions.
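
A minimal sketch, assuming `prediction_resource_id` is the Prediction resource ID saved from the Custom Vision settings page. The publish name `cats-dogs-model` is hypothetical; it is the name you will later pass to the prediction client.

```python
PUBLISH_ITERATION_NAME = "cats-dogs-model"  # hypothetical name for the published iteration


def publish(trainer, project_id, iteration_id, prediction_resource_id, publish_name=PUBLISH_ITERATION_NAME):
    """Publish a trained iteration to the prediction resource and return its published name."""
    # The prediction resource ID is the full Azure resource ID of the prediction
    # resource, not its key or endpoint
    trainer.publish_iteration(project_id, iteration_id, publish_name, prediction_resource_id)
    return publish_name
```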

Use the classify_image method of the predictor object to send a new image for analysis and retrieve the prediction result. Add the following code to the end of the program and run the application.
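
A sketch of the prediction call, assuming the project ID and publish name from the previous steps and a local image path. `predict` is a hypothetical helper that prints every tag probability and returns the most likely tag.

```python
def predict(predictor, project_id, publish_name, image_path):
    """Send a local image to the published endpoint and return the most likely tag."""
    with open(image_path, "rb") as image_file:
        results = predictor.classify_image(project_id, publish_name, image_file.read())
    for prediction in results.predictions:
        print(f"{prediction.tag_name}: {prediction.probability:.2%}")
    # Pick the tag with the highest probability rather than relying on result order
    best = max(results.predictions, key=lambda prediction: prediction.probability)
    return best.tag_name
```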

If the application ran successfully, navigate to the Custom Vision website to see the newly created project.

In this article, you learned how to use the Azure Custom Vision SDK for Python to build and train an image classification model. In the second part of this learning series, I will show you how to export your image classifier using the Python SDK and run the exported TensorFlow model locally to predict whether an image contains a cat or a dog.

If you want to delete this project, navigate to the Custom Vision project gallery page and select the trash icon under the project.
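
The same clean-up can also be done from Python. A minimal sketch using the training client’s `unpublish_iteration` and `delete_project` methods; unpublishing the iteration first is a precaution in this sketch, and the helper name is hypothetical.

```python
def delete_custom_vision_project(trainer, project_id, published_iteration_id=None):
    """Remove a Custom Vision project, unpublishing its iteration first if one is given."""
    if published_iteration_id is not None:
        # Precaution: take the iteration off the prediction endpoint before deleting
        trainer.unpublish_iteration(project_id, published_iteration_id)
    trainer.delete_project(project_id)
```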

If you have finished learning, you can delete the resource group from your Azure subscription. In the Azure portal, open the resource group and select Delete resource group.
