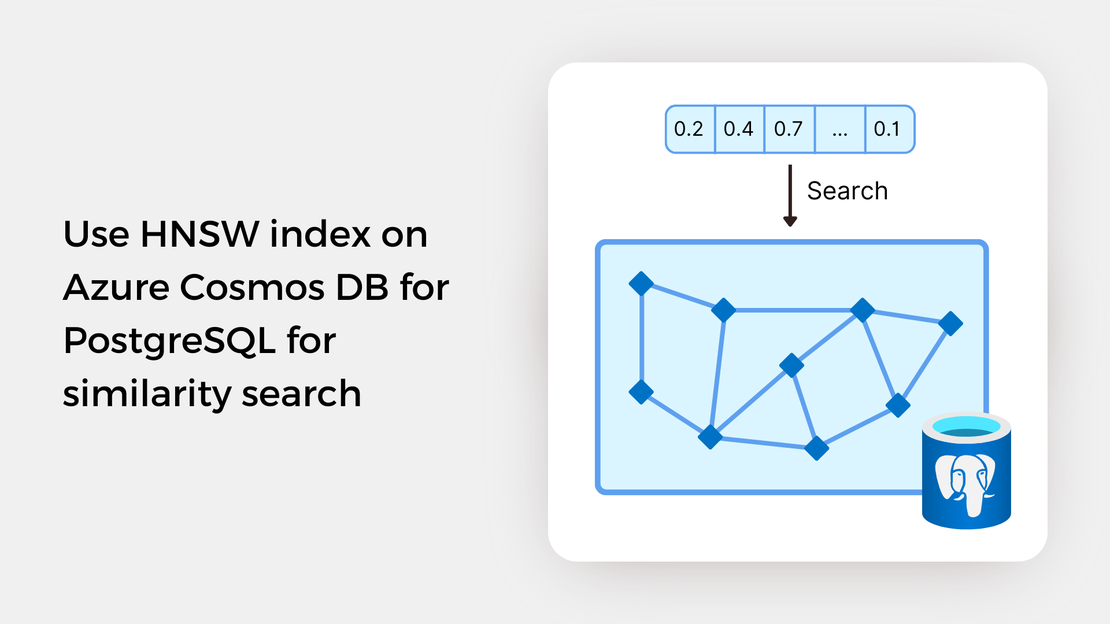

Use HNSW index on Azure Cosmos DB for PostgreSQL for similarity search

Explore vector similarity search using the Hierarchical Navigable Small World (HNSW) index of pgvector on Azure Cosmos DB for PostgreSQL.

Welcome to the next part of the “Image similarity search with pgvector” learning series! In the previous articles, you used the multimodal embeddings APIs of Azure AI Vision to generate embeddings for a collection of images of paintings and stored them in an Azure Cosmos DB for PostgreSQL table.

To find out more about previous posts, check out the links below:

If you have followed the previous posts, you should have created a table in your Azure Cosmos DB for PostgreSQL cluster, populated it with data, and uploaded the images to a container in your Azure Storage account. You are now ready to search for similar images using the vector similarity search features of the pgvector extension.

In this tutorial, you will:

To proceed with this tutorial, ensure that you have the following prerequisites installed and configured:

In this guide, you’ll learn how to query embeddings stored in an Azure Cosmos DB for PostgreSQL table to search for images similar to a search term or a reference image. The entire functional project is available in the GitHub repository. If you’re keen on trying it out, just fork the repository and clone it to have it locally available.

Before running the Jupyter Notebook covered in this post, you should:

Create a virtual environment and activate it.

Install the required Python packages using the following command:

| |

Create vector embeddings for a collection of images by running the scripts found in the data_processing directory.

Upload the images to your Azure Blob Storage container, create a PostgreSQL table, and populate it with data by executing the scripts found in the data_upload directory.

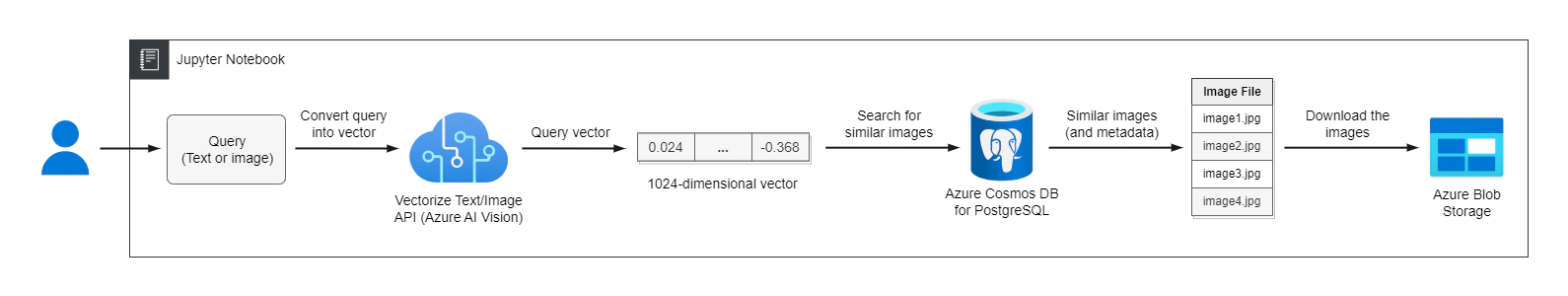

The image similarity search workflow that we will follow is summarized as follows:

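The similarity between the embedding of the query and the embeddings of the stored images is computed using SQL SELECT statements and the built-in vector operators of the PostgreSQL database. Specifically, cosine similarity will be used as the similarity metric.

This workflow is illustrated in the following diagram: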

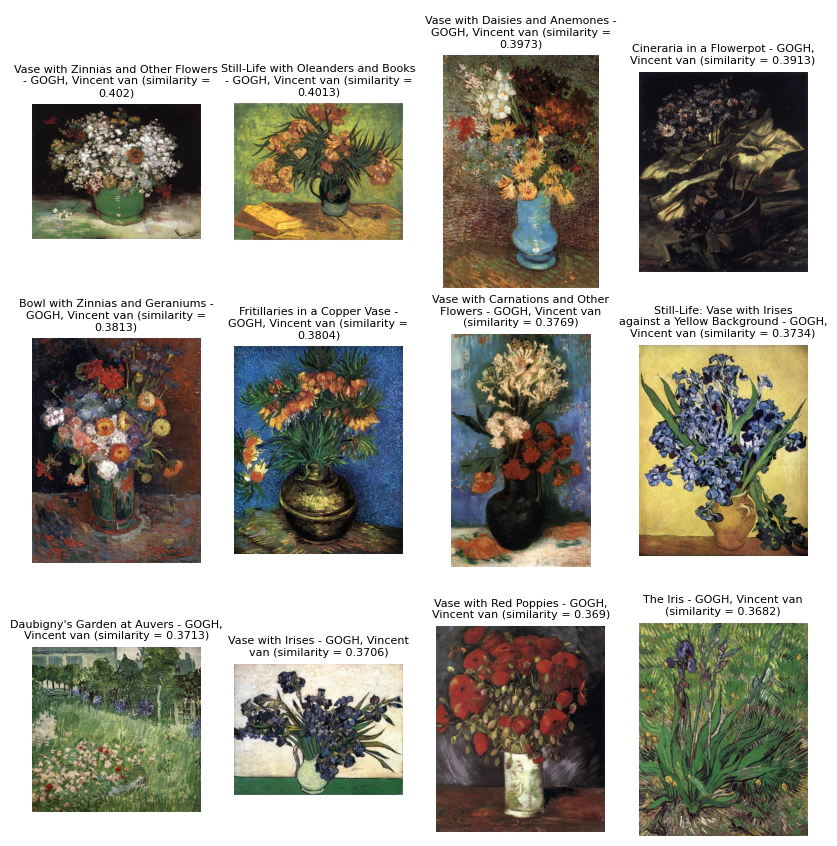

Given the vector embedding of the query, we can use SQL SELECT statements to search for similar images. Let’s understand how a simple SELECT statement works. Consider the following query:

| |
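A query of this kind can be sketched in Python as follows. This is a minimal sketch, not the notebook's code: the table name `paintings` and the column name `vector` are assumptions made for illustration, and `find_similar` expects an already open DB-API connection (for example, one created with psycopg2).

```python
from typing import Sequence

# Hypothetical names: the table and column used in the series may differ.
TABLE_NAME = "paintings"
VECTOR_COLUMN = "vector"


def to_vector_literal(embedding: Sequence[float]) -> str:
    """Format a sequence of floats as a pgvector text literal, e.g. '[0.1,0.2]'."""
    return "[" + ",".join(repr(float(x)) for x in embedding) + "]"


def build_top_k_query(table: str = TABLE_NAME, column: str = VECTOR_COLUMN) -> str:
    """Return a parameterized query that orders rows by cosine distance (<=>)."""
    return (
        f"SELECT * FROM {table} "
        f"ORDER BY {column} <=> %s::vector "
        f"LIMIT %s"
    )


def find_similar(conn, embedding: Sequence[float], k: int = 5):
    """Run the query on an open DB-API connection and return the k nearest rows."""
    with conn.cursor() as cur:
        cur.execute(build_top_k_query(), (to_vector_literal(embedding), k))
        return cur.fetchall()
```

Passing the vector as a parameter and casting it with `::vector` keeps the embedding out of the SQL string itself, which avoids manual quoting of several hundred floats.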

This query computes the cosine distance (<=>) between the given vector ([0.003, …, 0.034]) and the vectors stored in the table, sorts the results by the calculated distance, and returns the five most similar images (LIMIT 5). Additionally, you can obtain the cosine similarity between the query vector and the retrieved vectors by modifying the SELECT statement as follows:

| |
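Because cosine similarity equals one minus cosine distance, the modified statement can be sketched as below. The table name `paintings`, the column name `vector`, and the alias `cosine_similarity` are again assumptions for illustration; the named placeholders follow the psycopg2 `%(name)s` parameter style.

```python
def cosine_similarity_from_distance(distance: float) -> float:
    """Convert the cosine distance returned by <=> into cosine similarity."""
    return 1.0 - distance


def build_similarity_query(table: str = "paintings", column: str = "vector") -> str:
    """Return a query that also reports the cosine similarity of each result."""
    return (
        f"SELECT *, 1 - ({column} <=> %(query)s::vector) AS cosine_similarity "
        f"FROM {table} "
        f"ORDER BY {column} <=> %(query)s::vector "
        f"LIMIT %(k)s"
    )


# Example parameters for cursor.execute(build_similarity_query(), params):
params = {"query": "[0.003,0.034]", "k": 5}
```

The rows are still ordered by distance (ascending), so the most similar images, those with the highest `cosine_similarity`, come first.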

The pgvector extension provides three operators that can be used to calculate similarity:

| Operator | Description |
|---|---|
| `<->` | Euclidean distance |
| `<#>` | Negative inner product |
| `<=>` | Cosine distance |
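To make the operators concrete, the following pure-Python functions mirror what each one computes for two vectors of equal length. This is an illustrative sketch, not pgvector's implementation; the function names are chosen here for readability.

```python
import math
from typing import Sequence


def euclidean_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """What <-> computes: the straight-line (L2) distance between a and b."""
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def negative_inner_product(a: Sequence[float], b: Sequence[float]) -> float:
    """What <#> computes: the inner product multiplied by -1."""
    return -sum(x * y for x, y in zip(a, b))


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """What <=> computes: 1 minus the cosine of the angle between a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)
```

The inner product is negated so that, as with the other two operators, smaller values mean more similar vectors and an ascending `ORDER BY` returns the best matches first.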

In the Jupyter Notebook provided on my GitHub repository, you’ll explore the following scenarios:

Feel free to experiment with the notebook and modify the code to gain hands-on experience with the pgvector extension!

In this post, you explored the basic vector similarity search features offered by the pgvector extension. This type of vector search is referred to as exact nearest neighbor search, as it computes the similarity between the query vector and every vector in the database. In the upcoming post, you will explore approximate nearest neighbor search, which trades off result quality for speed.
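The idea behind exact nearest neighbor search can be sketched in a few lines of Python: compute the distance from the query to every stored vector, sort, and keep the top results. The in-memory dictionary and the image names below are made up for illustration; in the tutorial, this scan is performed by the database.

```python
import math
from typing import Dict, List, Sequence, Tuple


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 minus the cosine similarity of a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def exact_nearest_neighbors(
    query: Sequence[float],
    vectors: Dict[str, Sequence[float]],
    k: int = 5,
) -> List[Tuple[str, float]]:
    """Compare the query with every stored vector and return the k closest."""
    scored = [(cosine_distance(query, vec), name) for name, vec in vectors.items()]
    scored.sort()  # ascending distance: most similar first
    return [(name, dist) for dist, name in scored[:k]]


# Toy data with hypothetical image names and 2-dimensional embeddings.
images = {"a.jpg": [1.0, 0.0], "b.jpg": [0.0, 1.0], "c.jpg": [1.0, 1.0]}
top_two = exact_nearest_neighbors([1.0, 0.1], images, k=2)
```

Every query touches all stored vectors, so the cost grows linearly with the size of the collection. That is the cost approximate indexes aim to reduce.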

If you want to explore pgvector’s features, check out these learning resources:

Explore vector similarity search using the Hierarchical Navigable Small World (HNSW) index of pgvector on Azure Cosmos DB for PostgreSQL.

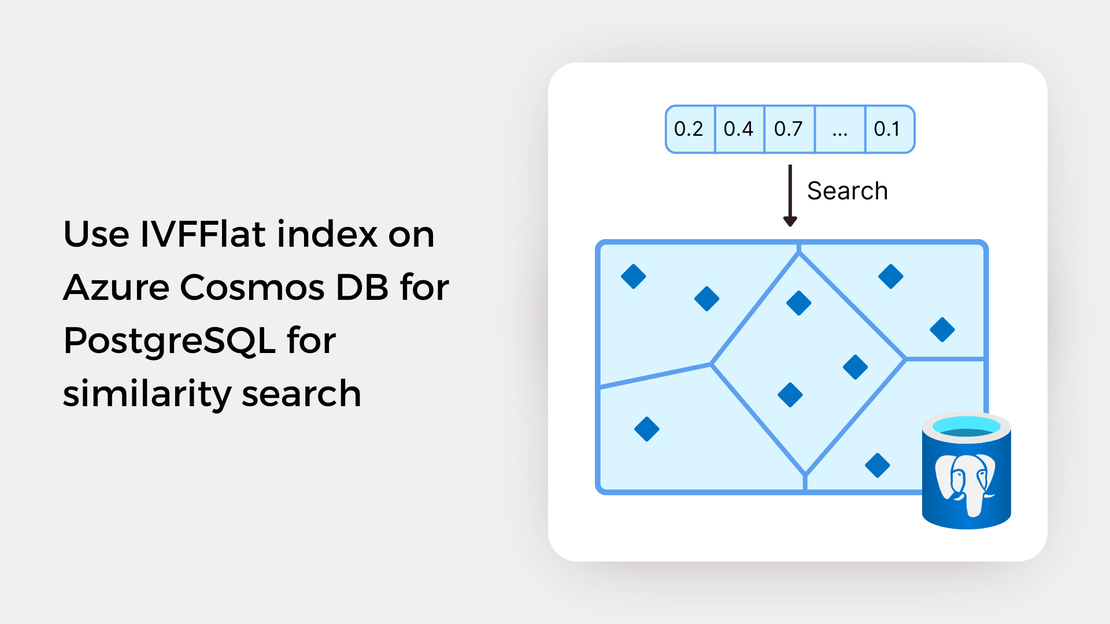

Explore vector similarity search using the Inverted File with Flat Compression (IVFFlat) index of pgvector on Azure Cosmos DB for PostgreSQL.

My presentation about vector search with Azure AI Vision and Azure Cosmos DB for PostgreSQL at the Azure Cosmos DB Usergroup.

My presentation about vector search with Azure AI Vision and Azure Cosmos DB for PostgreSQL at the virtual show MVP TechBytes.